Running Facebook ads without A/B testing? You could be burning a hole in your ad budget.

You’ve probably heard the saying that marketing is part art, part science. A/B testing is the recipe that brings the two together. Sure, you might be able to bake a cake without a recipe, but chances are you’ll bake a lot of bad ones before you get it right.

Meta A/B testing helps you turn guesswork into a winning strategy. This guide will walk you through everything you need to know to get the most out of your Meta campaigns.

Why A/B Testing Matters For Performance

Campaign performance varies greatly depending on factors like industry, target customer profile, and the length and complexity of the sales cycle. For example, a SaaS business typically operates on a longer sales cycle and may prioritise lead nurturing through educational content, webinars, and free trials.

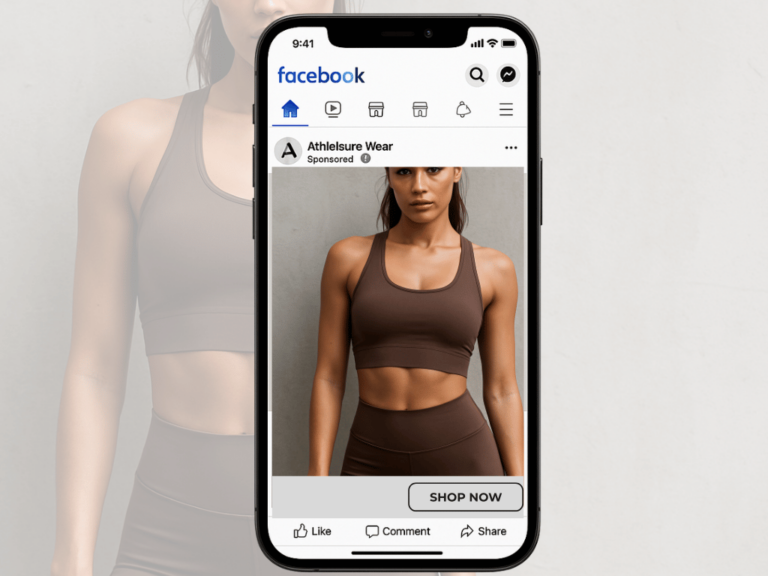

In contrast, an e-commerce brand typically aims for quick conversions, using limited-time offers or product-focused ads to drive immediate sales.

A/B testing helps refine these strategies by identifying what works best with your specific audience. It involves testing two variations of a single campaign element, such as ad copy, imagery, or calls to action (CTAs), to determine which performs better.

Best Practices for Split Testing on Meta

Before you hit the ground running with testing, keep these best practices in mind to help you get the results you’re aiming for:

Focus on Only One Variable at a Time

Changing a single element lets you attribute any change in performance to that element. For example, if you changed both the image and the headline, how would you know which one drove the results?

Have Controlled Variables

As with any experiment, the conditions need to be controlled to give you accurate results, so every variable that is not being tested must be kept the same. This means the audiences should match in size and composition, and each variation should be shown at the same time and for the same length of time.

For instance, if you were testing a CTA, but shared it with audience segments with different interests or showed it to them in different months when market conditions were different, you’d have inaccurate results.
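One way to keep audience conditions identical is to split a single audience into random, non-overlapping groups, so each person only ever sees one variation. The sketch below illustrates that idea with deterministic hashing. It is a hypothetical illustration of random assignment, not how Meta implements its own split; `assign_variant`, the test name and the user IDs are all made up.

```python
import hashlib


def assign_variant(user_id: str, test_name: str, variants: tuple = ("A", "B")) -> str:
    """Assign a user to exactly one variant of a test.

    Hashing the test name together with the user ID gives a stable,
    roughly uniform split: the same user always lands in the same group,
    so the groups never overlap.
    """
    key = f"{test_name}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical user IDs, purely for illustration.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-test")] += 1
print(counts)  # roughly 5,000 in each group
```

Because assignment depends only on the user and the test name, re-running the split never moves someone from one group to the other.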

Evaluate Success Beyond Conversions

Be careful not to get hung up on conversion rate alone. A/B testing can also uncover long-term revenue gains.

As an example, imagine you’re running two Meta ad variations for a SaaS product. Version A has a strong CTA that drives quick sign-ups, while Version B has a softer CTA that leads to fewer sign-ups, but those customers engage more, stay subscribed longer, and ultimately spend more over time.

If you were only considering the initial conversion rate, Version A would appear to be the winner. But by tracking metrics like customer lifetime value (LTV) or retention rate, you’d see that Version B actually drives more revenue in the long run. A/B testing gives you the insight to optimise for true business growth, not just short-term wins.
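To make the trade-off concrete, here is a minimal sketch that compares the two versions on both conversion rate and revenue. It assumes a deliberately simple LTV model (average monthly revenue multiplied by average months retained), and every number is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    clicks: int
    signups: int
    monthly_revenue_per_customer: float  # hypothetical average revenue per month
    avg_months_retained: float           # hypothetical average customer lifetime

    @property
    def conversion_rate(self) -> float:
        return self.signups / self.clicks

    @property
    def ltv(self) -> float:
        # Simple LTV model: average monthly revenue x average lifetime in months.
        return self.monthly_revenue_per_customer * self.avg_months_retained

    @property
    def total_revenue(self) -> float:
        return self.signups * self.ltv


a = Variant("A (strong CTA)", clicks=1_000, signups=80,
            monthly_revenue_per_customer=30.0, avg_months_retained=4)
b = Variant("B (soft CTA)", clicks=1_000, signups=50,
            monthly_revenue_per_customer=30.0, avg_months_retained=10)

for v in (a, b):
    print(f"{v.name}: conversion {v.conversion_rate:.1%}, "
          f"LTV ${v.ltv:,.0f}, revenue ${v.total_revenue:,.0f}")
```

With these made-up inputs, Version A converts at 8% versus 5% for Version B. However, Version B generates $15,000 in lifetime revenue against $9,600 for Version A.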

Consistency Is Key

Markets, audiences and platform algorithms are always evolving. Regular testing and refining keep your campaigns effective and relevant.

Define Your Goals

Having a clearly defined goal of what you want to accomplish through A/B testing is key to optimising performance. Whether you want to drive traffic or increase engagement, knowing your objective helps guide the test design and tells you which metrics to track.

For example, if you wanted to increase traffic, monitoring landing page views and click-through rates would let you know if your ad performed well.

Key Variables to Test in Meta Campaigns

Meta’s built-in A/B testing tools give you the flexibility to test one or more variables at a time, helping you optimise your campaigns based on real performance data. That said, testing one variable at a time keeps your results easiest to interpret. Here are a few key variables you can experiment with:

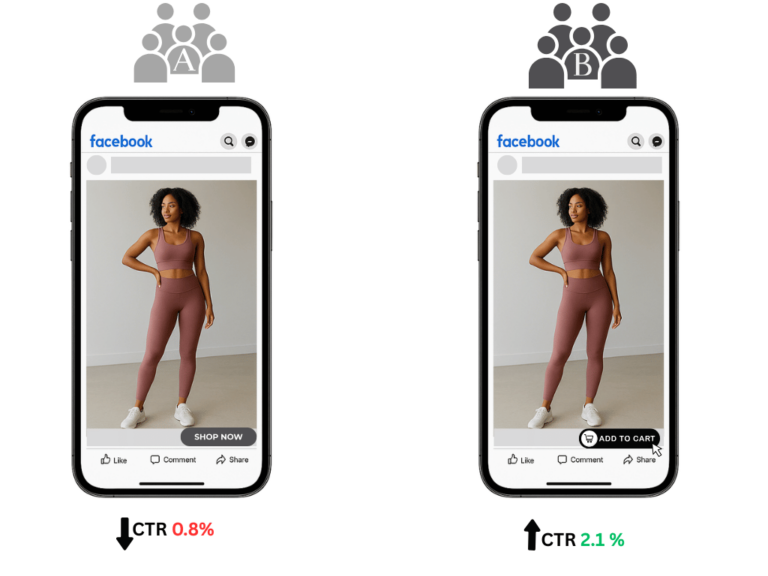

Audience Variations

Compare different audience segments to see which delivers better results. For example, you could test a demographic segment against an interest-based one.

To ensure a statistically valid and reliable test, choose an appropriately sized audience. Too large a group might dilute the impact of your variations, while too small a group may not give you enough data. As a general guideline, a test audience of between 10% and 20% of your total audience is a good starting point.

It’s not enough to simply note that one offer outperformed another within a particular audience segment. The most valuable insights come from asking deeper questions.

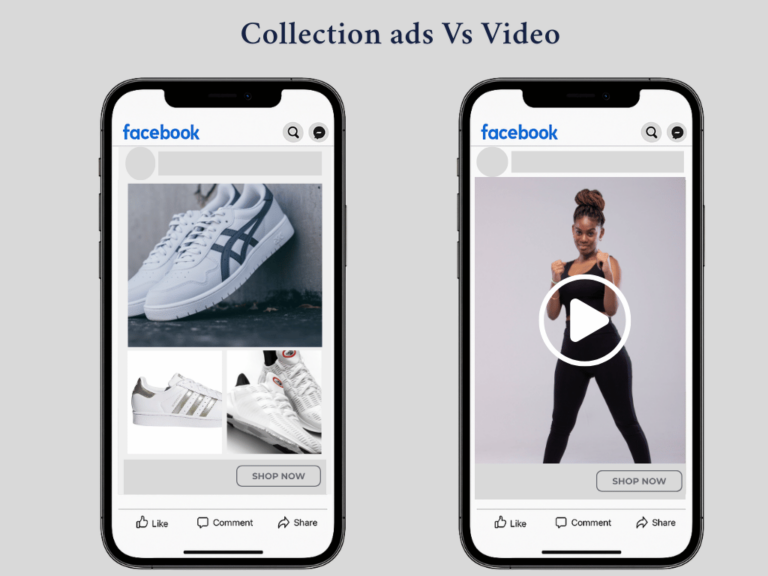

Ad Copy and Visuals

This involves testing creative elements in different formats or orientations, or with different messaging. For instance, you could compare a single image against a carousel, or test two different calls to action, to see which resonates most with your audience.

If your current copy and visuals aren’t hitting the mark, partnering with a paid ads agency can elevate your strategy by combining creative design with ongoing competitor analysis, market research, and platform expertise to ensure every ad is built to perform.

Before testing, bear in mind that ads should consistently reflect your brand’s colours, tone, and personality to build recognition and trust. As a starting point, create a strong, personalised, on-brand ad as your baseline, and then test specific variables incrementally.

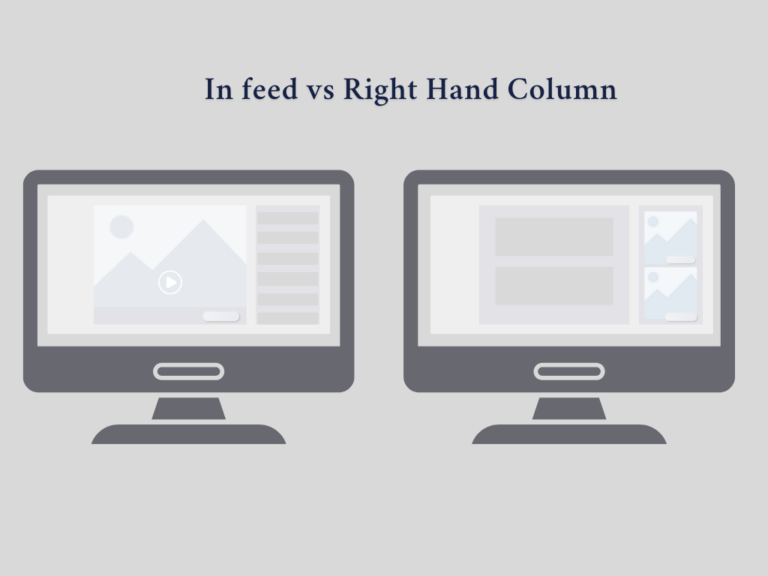

Placement Best Practices

This variable lets you compare different ad placements, such as in-feed versus Stories, to determine where your ads perform best. To test placements, select placement as your test variable during setup.

Meta recommends an even 50/50 split between Advantage+ placements and Facebook Feed placements, and running the test for between 3 and 14 days.
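Before launching, it can help to sanity-check whether your test can gather enough data inside that 3 to 14 day window. The sketch below assumes the 50/50 split applies to budget. It also assumes you have already estimated how many people each variant needs to reach and how many it can reach per day. All inputs are hypothetical, and `plan_placement_test` is a made-up helper that does not call any Meta tool.

```python
import math


def plan_placement_test(total_daily_budget: float, required_per_variant: int,
                        expected_daily_reach_per_variant: int) -> dict:
    """Split the daily budget 50/50 and estimate whether the test fits
    the recommended 3-14 day window. All inputs are your own estimates."""
    per_variant_budget = total_daily_budget / 2
    days_needed = math.ceil(required_per_variant / expected_daily_reach_per_variant)
    # Run for at least 3 days and cap the plan at 14 days.
    days_planned = min(max(days_needed, 3), 14)
    return {
        "daily_budget_per_variant": per_variant_budget,
        "days_needed": days_needed,
        "days_planned": days_planned,
        "fits_window": days_needed <= 14,
    }


plan = plan_placement_test(total_daily_budget=100.0, required_per_variant=4_000,
                           expected_daily_reach_per_variant=600)
print(plan)
```

If `fits_window` comes back `False`, you could raise the budget, widen the audience or test a bigger change, since a 14-day test would end before it collects enough data.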

Meta advises keeping the following in mind when testing placement variations:

More Placements Yield Better Results

Running your ads across multiple placements, such as Facebook, Instagram, Messenger, and Audience Network, tends to be more cost-effective. Meta’s system works better when it has more places to deliver your ad, helping you reach more people for less.

Focus on Cost Per Outcome

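A placement might seem more expensive but still contribute to better overall results, so compare placements on cost per outcome (for example, cost per purchase or per lead) rather than on cost per click alone. Also consider average daily reach: more placements can help you reach new people without raising costs.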

For example, Instagram Stories typically have a higher cost per click (CPC) than Facebook Feed ads. At first glance, Stories appear more costly to run, but they could have a greater impact on your overall strategy by increasing reach and conversions.
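A short calculation shows how a placement can have a higher CPC and still win on cost per outcome. The figures below are invented for illustration and are not real benchmarks for either placement.

```python
def cost_per_click(spend: float, clicks: int) -> float:
    return spend / clicks


def cost_per_result(spend: float, results: int) -> float:
    return spend / results


# Hypothetical results for two placements with the same spend.
placements = {
    "Facebook Feed": {"spend": 500.0, "clicks": 1_000, "conversions": 20},
    "Instagram Stories": {"spend": 500.0, "clicks": 625, "conversions": 25},
}

for name, m in placements.items():
    cpc = cost_per_click(m["spend"], m["clicks"])
    cpa = cost_per_result(m["spend"], m["conversions"])
    print(f"{name}: CPC ${cpc:.2f}, cost per conversion ${cpa:.2f}")

# Judge the winner on cost per outcome, not on CPC.
best = min(placements,
           key=lambda p: cost_per_result(placements[p]["spend"], placements[p]["conversions"]))
print(f"Lowest cost per conversion: {best}")
```

Here Stories costs $0.80 per click against $0.50 for Feed. However, Stories produces a conversion for $20 rather than $25, so it comes out ahead on the metric that matters.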

Step-by-Step Guide to Using Meta’s Toolbar

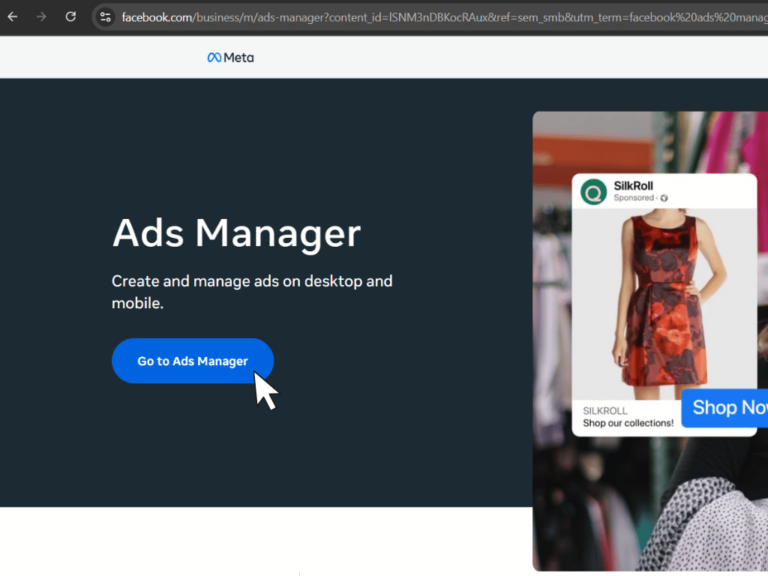

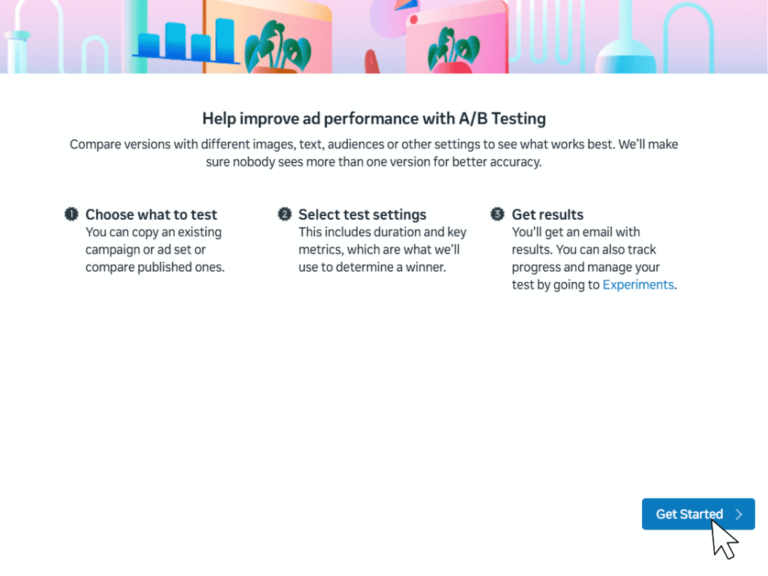

Meta offers several ways to create A/B tests depending on what you want to test (e.g., creative, audience, placements). Besides the toolbar, you can create a test while setting up a new campaign or by duplicating an existing campaign.

To use the toolbar method:

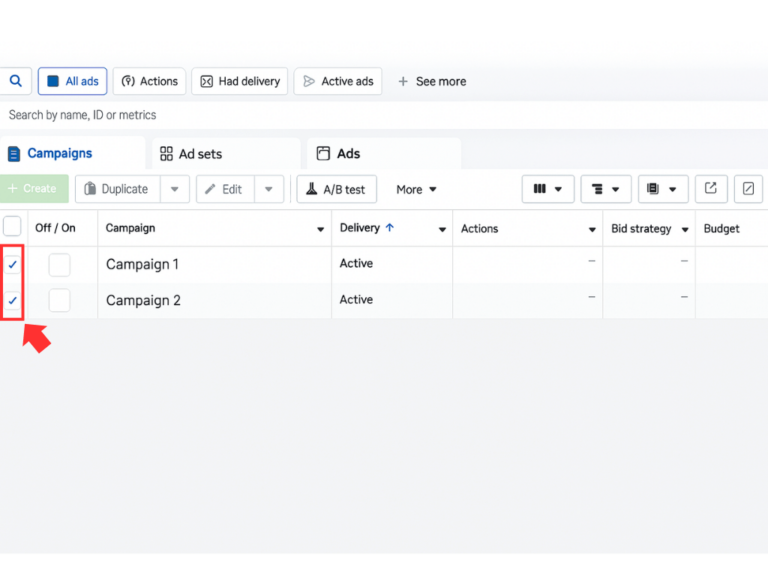

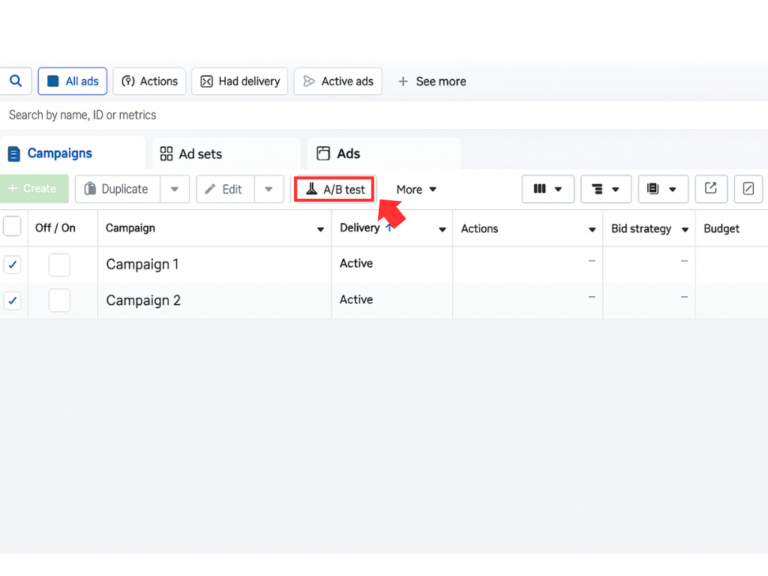

Step 1: Open the main table in your Ads Manager dashboard, where you’ll see a list of all your active ads, campaigns, and ad sets.

Step 2: Select the checkbox next to the campaigns or ad sets you want to include in your A/B test.

Step 3: Click ‘A/B Test’ in the toolbar at the top.

Step 4: Choose the variable you’d like to test and follow the guided setup steps provided.

View Results and Determine the Winner

Your A/B test results can help you make informed decisions about your existing campaigns, as well as shape your strategies for future ones.

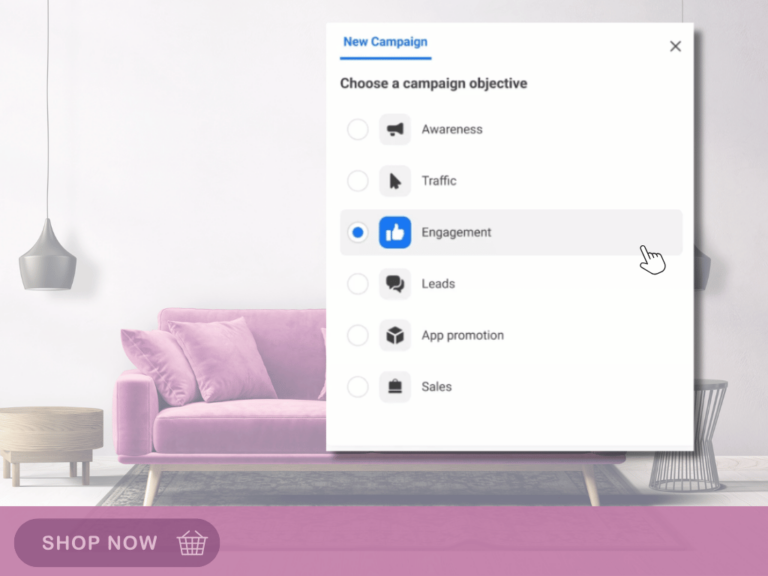

Once the test concludes, review the performance of each variant by focusing on the metrics that align with your campaign goals. Meta’s reporting tools make it easy to compare results and identify the top-performing version. When setting up your campaign, Meta lets you choose from six objectives: awareness, traffic, engagement, leads, app promotion and sales.
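Before declaring a winner, it is worth checking that the gap between variants is bigger than random noise. Meta’s reporting shows its own results for a test. The sketch below instead uses a standard two-proportion z-test, which is a general statistical method rather than Meta’s calculation. The conversion counts are hypothetical, and the 0.05 threshold is a common convention, not a rule.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). A positive z means variant B converted better.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 10,000 people reached per variant.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("The difference is unlikely to be due to chance alone.")
else:
    print("Not enough evidence to call a winner yet.")
```

With a 2.0% versus 2.6% conversion rate on these made-up numbers, the difference clears the 0.05 threshold. A 2.0% versus 2.1% split at the same volume would not.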

Below are some examples of metrics to prioritise depending on your objective when interpreting your results:

Sales → Conversion rate, return on ad spend (ROAS), cost per conversion and click-through rate.

Leads → Conversion leads, cost per conversion lead, conversion lead rate.

Engagement → Post engagement in the form of likes, comments, shares, and link clicks.

App Promotion → Focus on installs, in-app actions, and cost per install.

Traffic → Use metrics like link clicks, click-through rate, and cost per link click.

Awareness → Prioritise reach, frequency, and ad recall lift.
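Most of these metrics are simple ratios of raw counts. The sketch below computes the common ones from a single variant’s totals. The `AdResults` class and its field names are hypothetical, and Meta’s own reported definitions may differ slightly; for example, conversion rate can be measured per click or per impression.

```python
import math
from dataclasses import dataclass


@dataclass
class AdResults:
    # Raw totals for one variant (hypothetical field names).
    impressions: int
    reach: int
    link_clicks: int
    conversions: int
    spend: float
    revenue: float

    def ctr(self) -> float:
        """Click-through rate: link clicks per impression."""
        return self.link_clicks / self.impressions

    def conversion_rate(self) -> float:
        """Conversions per link click."""
        return self.conversions / self.link_clicks

    def cost_per_conversion(self) -> float:
        return self.spend / self.conversions

    def cost_per_link_click(self) -> float:
        return self.spend / self.link_clicks

    def roas(self) -> float:
        """Return on ad spend: revenue per unit of spend."""
        return self.revenue / self.spend

    def frequency(self) -> float:
        """Average number of times each person saw the ad."""
        return self.impressions / self.reach


variant = AdResults(impressions=50_000, reach=20_000, link_clicks=750,
                    conversions=30, spend=600.0, revenue=2_400.0)
print(f"CTR {variant.ctr():.1%}, conversion rate {variant.conversion_rate():.1%}, "
      f"ROAS {variant.roas():.1f}x, frequency {variant.frequency():.1f}")
```

Computing the same set of ratios for both variants keeps the comparison consistent, whichever objective you are optimising for.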

The Big Picture

A/B testing on Meta isn’t about chasing quick wins; it’s about patience, consistency, and continually learning what truly resonates with your audience. It takes time, but small insights add up to significant results.

It’s a powerful way to identify underperforming ads, better understand your ideal customer, and get more from your ad spend. It’s the engine behind many successful Facebook ad campaigns.

If you’re looking for a fresh perspective or some guidance on your next test, feel free to get in touch. You can reach us via our webform or at company@snowballcreations.com.